36 Training for Robustness and Generality

36.1 Introduction

In the previous chapter, we saw that performance of a vision system can look quite good on a training set but fail dramatically when deployed in the real world, and the reason is often distribution shift. How can we train models that are more robust to these kinds of shifts?

This chapter presents several strategies that all have the same goal: broaden the training distribution so that the test data just looks like more of the training data. The following sections present two ways of doing this.

36.2 Data Augmentation

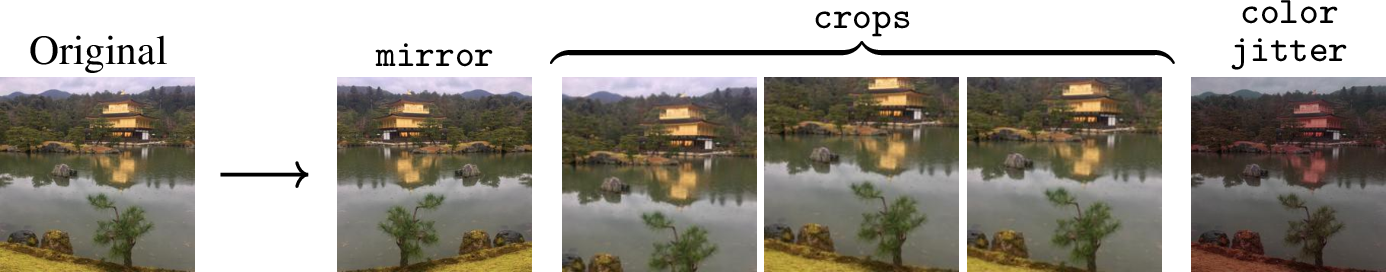

augments a dataset by adding random transformations of each datapoint. For example, below we show some common data augmentations applied to an example image (Figure 36.1):

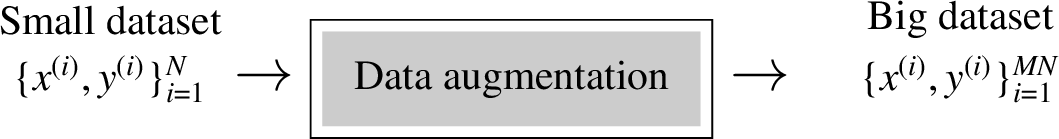

When you do this for every image in a dataset, you are essentially mapping from a smaller dataset to a larger dataset. We know that more data is usually better, so this is a way to simulate the effect of adding more real data to your dataset.

Data augmentation might not seem not seem very glamorous, but it is one of the most important tools at our disposal. Machine learning is data-driven intelligence, and the bigger the data the better. Data augmentation is like a philosopher’s stone of machine learning: it converts lead (small data) into gold (big data).

The way data augmentation works is that for each \(\{\mathbf{x}^{(i)}, \mathbf{y}^{(i)}\}\) pair in your original dataset, you add \(M\) new synthetic pairs \(\{T(\mathbf{x}^{(i)};\theta^{(j)}), \mathbf{y}^{(i)}\}_{j=1}^M\), where \(T\) is a data transformation function with stochastic parameters \(\theta \sim p_{\theta}\). As an example, \(T\) might be the crop function, and then \(\theta\) would be a set of coordinates specifying where to apply the crop. We would randomly select different coordinates for each of the \(M\) crops we generate.

Notice that we did not apply any transformation to the \(\mathbf{y}^{(i)}\) values. The assumption we are making is that the target outputs \(\mathbf{y}\) are invariant to the augmentations of the inputs \(\mathbf{x}\). Let \(y(\mathbf{x})\) be the true \(\mathbf{y}\) for an input \(\mathbf{x}\); then we are assuming that, \[\begin{aligned} y(\mathbf{x}) = y(T(\mathbf{x},\theta)) \quad \forall \theta \quad\quad \triangleleft \quad\text{$y$ invariant to $T$} \end{aligned}\] For example, if our task is scene classification then we would want to only apply augmentations that do not affect the class label of the scene. We could use mirror augmentation, since the mirroring a scene does not change its class. In contrast, if the task were optical character recognition, then mirror augmentation would not be a good idea. That’s because the mirror image of the character b looks just like the character d. Character recognition is not mirror invariant. In other words, for the task of character recognition, we do not have that \(y(\texttt{mirror}(\mathbf{x})) = y(\mathbf{x})\). The same is true for molecular data that exhibits so-called chiral structure: a molecule may behave very differently from its mirror image.

To handle the case where \(y(\mathbf{x}) \neq y(T(\mathbf{x},\theta))\), we may use a more advanced kind of data augmentation, where we transform the \(\mathbf{y}\) values at the same time as we transform the \(\mathbf{x}\) values, using transforms \(T_{\mathcal{X}}\) and \(T_{\mathcal{Y}}\). This results in an augmented dataset of the form \(\{T_{\mathcal{X}}(\mathbf{x}^{(i)};\theta^{(j)}), T_{\mathcal{Y}}(\mathbf{y}^{(i)};\theta^{(j)})\}_{j=1}^M\). For this to make sense, we require that \(T_{\mathcal{X}}\) and \(T_{\mathcal{Y}}\) express an equivariance between the two domains, which means, \[\begin{aligned} T_{\mathcal{Y}}(y(x),\theta_{\mathcal{Y}}) = y(T_{\mathcal{X}}(x,\theta_{\mathcal{X}})) \quad \forall \theta \quad\quad \triangleleft \quad\text{$y$ equivariant with respect to $T_\mathcal{X}, T_\mathcal{Y}$} \end{aligned}\] This kind of data augmentation is standard in settings where \(\mathbf{y}\) has spatial or temporal structure that is aligned with the structure in \(\mathbf{x}\), like if \(\mathbf{x}\) is an image and \(\mathbf{y}\) is a label map as in the problem of semantic segmentation. Then, if we do random crop augmentation, we need to make sure to apply the same crops to both \(\mathbf{x}\) and \(\mathbf{y}\): \(\{\texttt{crop}(\mathbf{x}^{(i)};\theta^{(j)}), \texttt{crop}(\mathbf{y}^{(i)};\theta^{(j)})\}_{j=1}^M\).

Sometimes our data is not sampled from a static dataset but instead is generated by a simulator. In this setting we may apply an idea analogous to data augmentation called . Here, rather than adding random transformations to points in a dataset, we instead randomize the parameters of the simulator, so that each interaction with the simulation will look slightly different, for example, the lighting conditions may be randomized. The effect is the same as with data augmentation: you convert a narrow set of experiences (the simulation with one kind of lighting condition) to a much broader set of experiences to learn from (i.e., the simulation with many different lighting conditions).

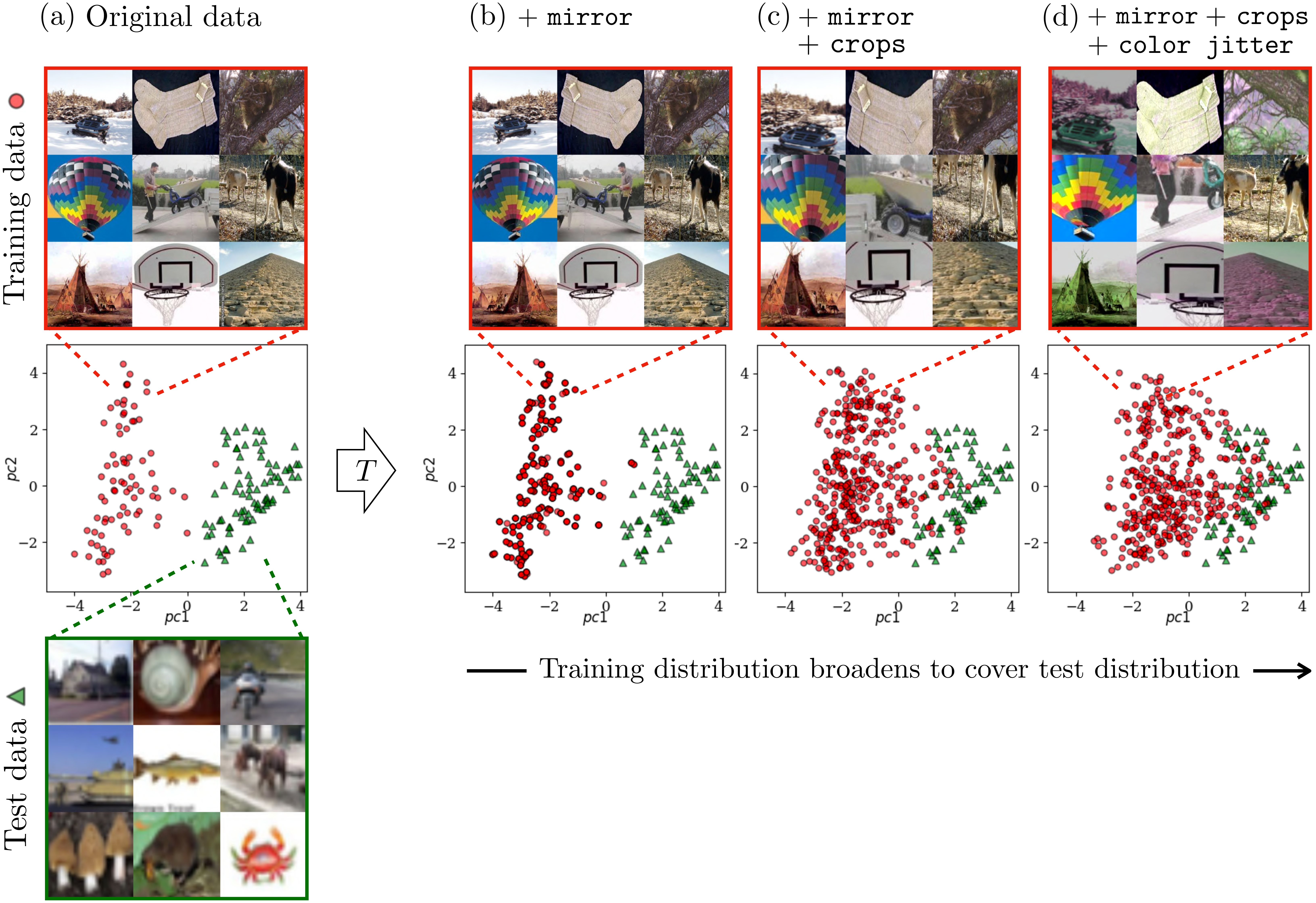

Why does data augmentation work? It broadens the distribution of training data, and this can reduce the gap between the training data and the test data. This effect is illustrated in Figure 36.2. In this example, we have training image from one dataset (Caltech256 [1]) and test images from a different dataset (CIFAR100 [2]). The test images look quite a bit different from the training data; they are lower resolution and contain a different set of object categories. We say that the test data are out-of-distribution relative to the training data. Data augmentation adds variation to the training data, which broadens the training distribution. The result is that the test data become more in-distribution, compared to the training data. If we apply enough data augmentation, then the test data will look just like more random samples from the training distribution!

mirror augmentations (random horizontal flips). (c) The same plus crops (crop then rescale to the original size). (d) The same plus color jitter (random shifts in color and contrast).

36.2.1 Learned Data Augmentation

Simple transformations like mirror flipping or cropping can be very effective while also being easy to implement by hand. After learning, the model will be invariant (or sometimes equivariant, if the \(\mathbf{y}\) values are transformed too) with respect to these transformations. But these are just a few of all possible transformations that we might want to be invariant to. Imagine if we are building a pedestrian detector. Then we would probably like it to be invariant to pose variation in the pedestrian. This can be achieved by augmenting our data with random pose variations. But to do so may be very hard to code by hand. Instead we can use learning algorithms to learn how to augment our data and achieve the invariances we would like.

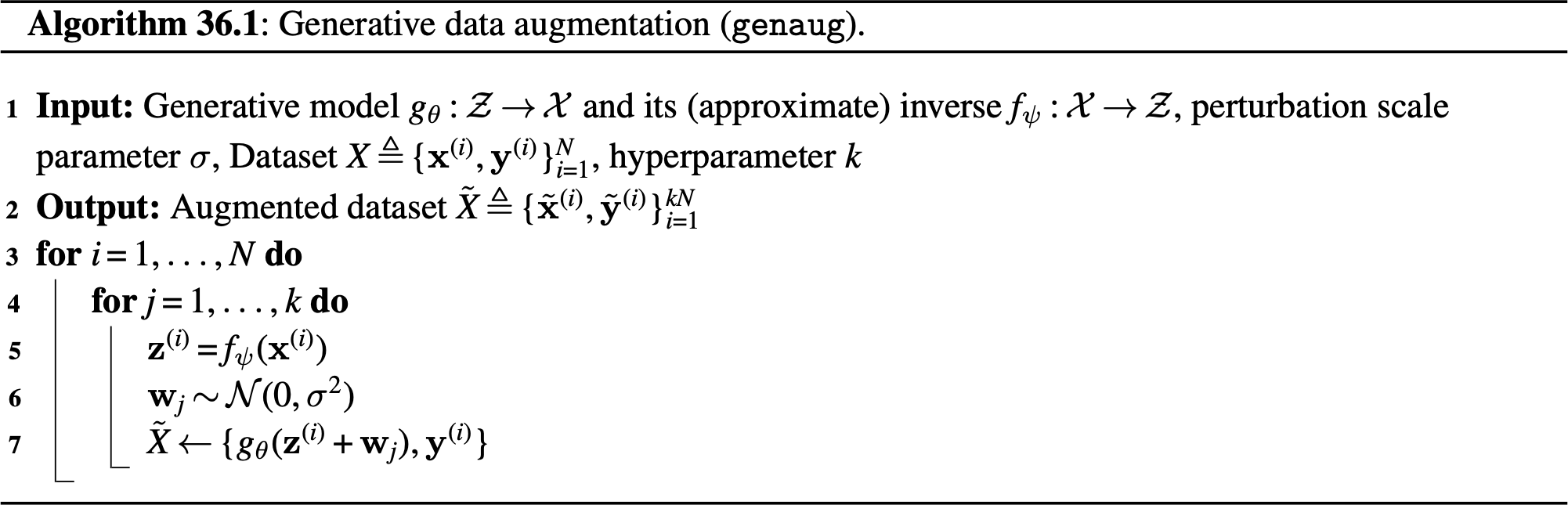

One way to do this is to use generative models. Latent variable generative models like generative adversarial networks (GANs) or variational autoencoders (VAEs) are especially suitable, because the latent variables can be used to control different factors of variation in the generated data (see Chapter 33). Given a datapoint \(\mathbf{x}\), an encoder \(f_{\phi}: \mathcal{X} \rightarrow \mathcal{Z}\), and a generator \(g_{\theta}: \mathcal{Z} \rightarrow \mathcal{X}\), we can create an augmented copy of \(\mathbf{x}\) as, \[\begin{aligned} \mathbf{x} = g_{\theta}(f_{\psi}(\mathbf{x})+\mathbf{w}) \quad\quad \triangleleft \quad\text{generative data augmentation via latent manipulation} \end{aligned}\] where \(\mathbf{w}\) is a small perturbation to the latent variable \(\mathbf{z}\).

GANs do not necessarily come with encoders \(f_{\psi}\), but for applications that need one, like we have here, you can simply train an encoder via supervised learning on pairs \(\{g_{\theta}(\mathbf{z}), \mathbf{z}\}\), solving \(\arg\min_{\psi} \mathbb{E}_z [\mathcal{L}(f_{\psi}(g_{\theta}(\mathbf{z})), \mathbf{z})]\). See [4] for discussion of this method and more advanced techniques.

If we do this for a variety of settings of \(\mathbf{w}\), then we will get a set of output images that are all slight variations of one another, just like we saw previously in Figure 33.15. One simple approach is to just use Gaussian perturbations in latent space, that is, \(\mathbf{w} \sim \mathcal{N}(0,\sigma^2)\) for some perturbation scale given by \(\sigma\). Algorithm 36.1 formalizes this approach (see [5] for a representative work that roughly follows this recipe, or [6] for an alternative approach to generative data augmentation).

There are some subtleties when working with learned data augmentation. You might be tempted to train \(g_{\theta}\) on the same dataset you are trying to augment, that is, train \(g_{\theta}\) on \(X\), then use \(g_{\theta}\) to augment \(X\). This can have interesting regularizing effects, but it introduces an uncomfortable circularity, which can be understood as follows. We want to create augmented data for training some function \(f\). Let the learner for \(f\) be denoted as the function \(L\), which is a mapping from input data to output learned function \(f\).

It can be confusing to think of a learning algorithm as a function, but that’s what it is; as we pointed out in Chapter 9, a learner is a function that outputs a function.

Without data augmentation we have \(f = L(X)\), and with data augmentation we have \(f = L(\tilde{X})\). But now, when \(g_{\theta}\) is trained on \(X\), then \(\tilde{X}\) is just a function of \(X\); in particular, if we use algorithm Algorithm 36.1, it is \(\tilde{X} = \texttt{genaug}(X)\). So, we can define a new learner \(\tilde{L} = L \circ \texttt{genaug}\) where we have \(f = \tilde{L}(X) = L(\texttt{genaug}(X)) = L(\tilde{X})\). This demonstrates that there exists a learning algorithm, \(\tilde{L}\), that uses unaugmented data and is just as good as our learning algorithm that used augmented data.

A clearer gain can be achieved by using a \(g_{\theta}\) pretrained on external data. For example, if we have a general purpose image generative model, trained on millions of images (such as [7] or [8]), then we can use it to augment a small dataset for a new specific task we come across.

36.3 Adversarial Training

With data augmentation, the learning problem looks like this: \[\begin{aligned} \mathop{\mathrm{arg\,min}}_f \mathbb{E}_{\mathbf{x},\mathbf{y}} [\mathbb{E}_{\theta \sim p_{\theta}}[\mathcal{L}(f(T(\mathbf{x};\theta)),\mathbf{y})]] \end{aligned}\] Here we are applying random transformations to the data, and we want our learner to do well in expectation over these random transformations. Instead of doing random transformations, we could instead consider augmenting with worst case transformations, applying those transformations that incur the highest loss possible for a given training example: \[\begin{aligned} \mathop{\mathrm{arg\,min}}_f \mathbb{E}_{\mathbf{x},\mathbf{y}} [\max_{\theta \in \Theta}[\mathcal{L}(f(T(\mathbf{x};\theta)),\mathbf{y})]] \end{aligned} \tag{36.1}\]

This idea is known as . One of its effects is that it can increase the robustness of the learned function \(f\) to perturbations that may occur at test time (like those we saw in the previous chapter).

In the context of adversarial robustness, one form of Equation 36.1 is to set \(T\) to be an epsilon-ball attack, that is, \(T(\mathbf{x}) = \mathbf{x}+\mathbf{\epsilon}\): \[\begin{aligned} \mathop{\mathrm{arg\,min}}_f \mathbb{E}_{\mathbf{x},\mathbf{y}} [\max_{\mathbf{\epsilon} \, s.t. \left\lVert\mathbf{\epsilon}\right\rVert < r}[\mathcal{L}(f(\mathbf{x}+\mathbf{\epsilon}),\mathbf{y})]] \end{aligned} \tag{36.2}\]

This can increase robustness against the kinds of epsilon-ball attacks we saw in the previous chapter.

Adversarial training has many other uses as well, beyond the scope of the current chapter- it is used for training generative adversarial networks (Chapter 32), for training agents to explore in reinforcement learning [9], and for training agents that compete against other agents [10]. This general approach is also known as robust optimization in the optimization literature.

36.4 Toward General-Purpose Vision Models

The lesson from this chapter is that if you train on more and more data, you can achieve better coverage over all the test cases you might encounter. This raises a natural question: Rather than inventing new augmentation and robustness methods, why not just collect ever more data? This approach is becoming increasingly dominant, and a major focus of current efforts is on just scaling the training data size and diversity.

36.4.1 Multitask training

Because we have finite data for any given task, it can help to share data between tasks. Suppose we have two tasks, \(A\) and \(B\). How can we borrow data from task \(B\) to increase the data available for task \(A\)? In line with the theme of this chapter, one approach is to not just broaden the data but to also broaden the task. The idea is to define a new task, \(AB\), for which tasks \(A\) and \(B\) are special cases. Then we can train \(AB\) on both the data for task \(A\) and for task \(B\). An example of a metatask like \(AB\) is data imputation (see Chapter 33). One example of data imputation is to predict missing color channels; this is the colorization problem, call this task \(A\). Another data imputation problem is predicting a chunk of pixels masked out of the image; this is the inpainting problem, which we can call task \(B\). We can train a joint colorization–inpainting model if we simply set up both as data imputation and train a general data imputation model. This model can be trained on both colorization training data and inpainting training data.

Data imputation is an incredibly general framework. Can you think of other vision problems that can be formulated as data imputation? Or, here is a harder question: Can you think of any vision problems that cannot be formulated this way?

In the next chapter, we will see several more examples of how to make use of data from task \(B\) for task \(A\).

36.5 Concluding Remarks

A grand challenge of computer vision is to make algorithms that work in general, no matter the setting in which you deploy them. For example, we would like object detectors that will recognize a cat on the internet, a cat in your house, a cat on Mars, an upside down cat, and so on. One of the simplest ways to achieve this is to train for generality: train your system on as much data, as diverse data, and as many tasks as possible. This approach is, perhaps, the main reason we now have computer vision systems that really work in practice, whereas a decade ago we didn’t.